How (not) to cool a video card

Note: I really don’t like pointing fingers at people about how they did something wrong. This was however too perfect a textbook case to pass by 🙂


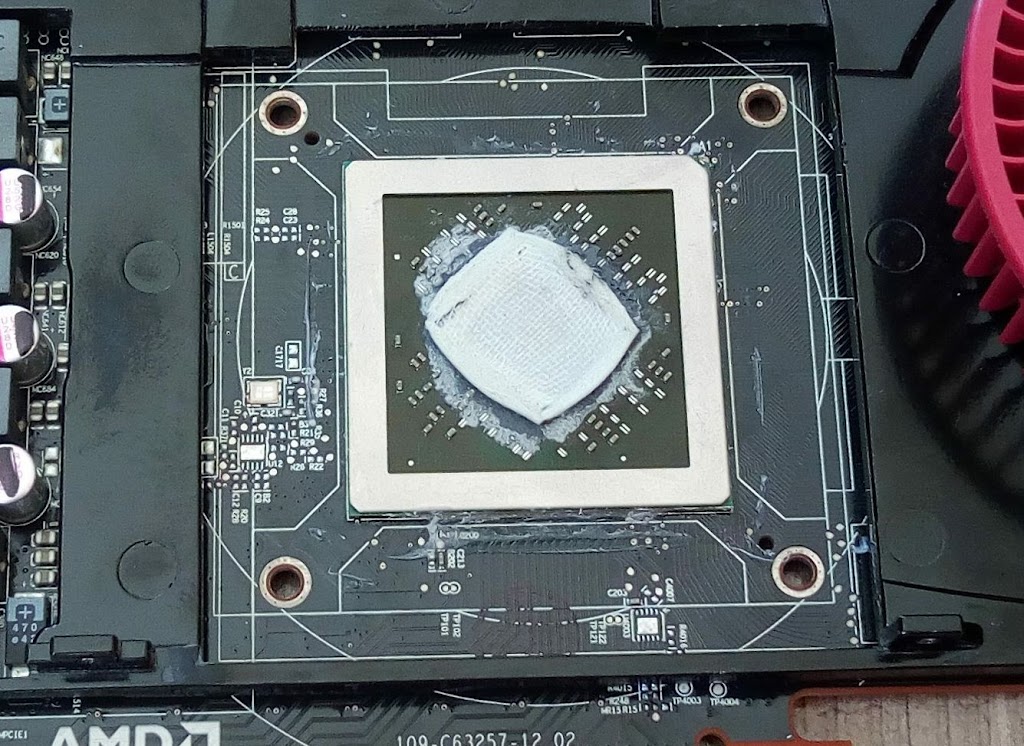

Radeon R9 270 with a thermal pad. Priceless.


So, a few days ago I got a used Radeon R9 270 from eBay.

The card was sold as faulty – the previous owner reported it overheating to 100+ °C as soon as it booted and loaded Chrome.

(Upon receiving the card it turned out it wouldn’t even POST, but that is another story. An impending bake looming on the horizon.)

Here is what happened IMO:

The guy apparently decided that the card was getting too hot.

He decided to replace the thermal paste and the pads.

And the interesting part:

He replaced the thermal paste with a pad, and the pads with thermal paste.

What can I say, he gets bonus points for creativity.

Now, for all of you thermal pad fans out there – I agree that pads can be a great solution with crude heat sinks on a ~15 W laptop GPU. But let’s be honest, there is a huge difference between an underpowered laptop GPU and a 150 W desktop video card (though 150 W in this case is peak board consumption, with the GPU itself drawing up to around 60 W).

Thermal paste provides much better heat transfer, even if both the paste and the pad are rated at, say, 6 W·m⁻¹·K⁻¹.

And here is why – pure copper has a thermal conductivity of 401 W·m⁻¹·K⁻¹ (link), which is more than 50 times that of any thermal paste or pad. So the point is not to wrap the whole thing in thermal grease and pads, but to provide the most direct possible contact between the chip and the heat sink, minimizing the thickness of anything in between.

Quality copper heat sinks on modern video cards allow an extremely tight fit on the GPU, so they only require a tiny amount of thermal compound to fill the microscopic voids caused by irregularities of the contact surfaces.

Throw that fat loaf of a thermal pad in between and you add a far thicker layer of low thermal conductivity material, hampering heat transfer to the heat sink. 50 times thicker? 100 times thicker? Quite a lot, at any rate.

If you put a thermal pad on a desktop GPU, it is pretty much doomed to constant overheating.
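To put rough numbers on this, here is a minimal sketch of the temperature drop across the interface layer, using the usual one-dimensional conduction estimate dT = P · t / (k · A). The 60 W and 6 W·m⁻¹·K⁻¹ figures come from the text above; the die area (about 200 mm²), the paste layer thickness (0.02 mm) and the pad thickness (1 mm) are my own assumptions for illustration, not measurements from this card.

```python
def interface_temp_drop(power_w, thickness_m, conductivity_w_mk, area_m2):
    """Temperature drop in kelvin across a flat layer: dT = P * t / (k * A)."""
    return power_w * thickness_m / (conductivity_w_mk * area_m2)


GPU_POWER_W = 60.0          # from the text: the GPU itself draws up to ~60 W
CONDUCTIVITY_W_MK = 6.0     # from the text: paste and pad both rated 6 W/(m*K)
DIE_AREA_M2 = 200e-6        # ASSUMPTION: roughly 200 mm^2 of contact area
PASTE_THICKNESS_M = 20e-6   # ASSUMPTION: ~0.02 mm paste film under pressure
PAD_THICKNESS_M = 1e-3      # ASSUMPTION: 1 mm thermal pad

paste_drop = interface_temp_drop(
    GPU_POWER_W, PASTE_THICKNESS_M, CONDUCTIVITY_W_MK, DIE_AREA_M2
)
pad_drop = interface_temp_drop(
    GPU_POWER_W, PAD_THICKNESS_M, CONDUCTIVITY_W_MK, DIE_AREA_M2
)

print(f"paste layer: about {paste_drop:.0f} K lost across the interface")
print(f"1 mm pad:    about {pad_drop:.0f} K lost across the interface")
```

With these assumed numbers the pad costs tens of kelvin before the heat even reaches the copper, while the thin paste film costs about one. The exact values will differ on a real card, but the ratio follows directly from the thickness ratio, since the conductivity and area are the same in both cases.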


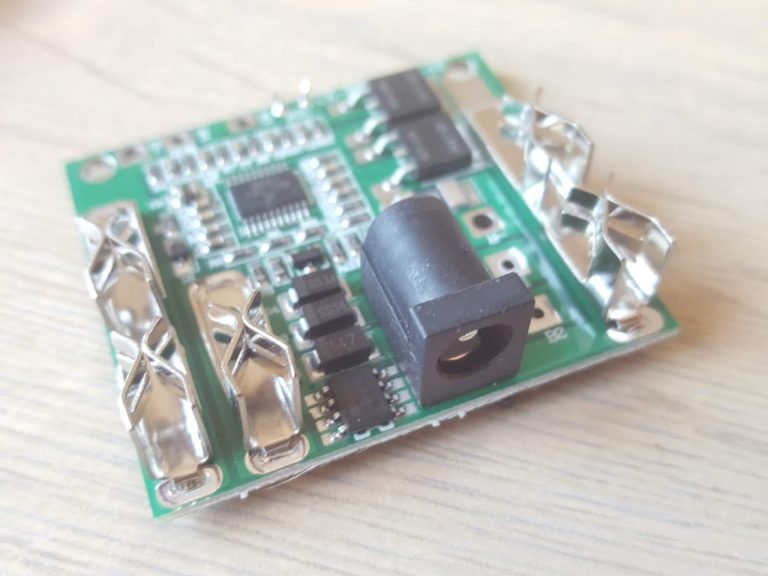

That mightiness of a heat sink.


Thermal pads, on the other hand, are for places where the heat sink fit is designed with loose tolerances, or with an outright fixed 1-2 mm clearance. In such cases you just can’t achieve a tight fit without a corresponding 1-2 mm pad.

Speaking of which, our R9 270 again serves us as a very fine little example of how not to do things.

Just look at those lumps of wasted thermal paste. The guy probably wasted half a syringe on the memory modules and the MOSFETs, only to have it barely connect the heat sinks.

First, the paste is not tight at all, and it is anything but secure, hanging there loosely.

Second, the heat transfer is definitely not optimal, as the contact surfaces are too small and there is no pressure.


Whole lotta thermal paste


So in this particular case I am quite certain that the owner himself killed the GPU with that thermal pad. It wasn’t AMD, it wasn’t a faulty heat sink or weak BGA solder or anything of the sort.